Seven Things You Have in Common With DeepSeek AI

Author: Shawna | Date: 25-02-22 21:20 | Views: 38 | Comments: 0

As Andy emphasized, a broad and deep range of models provided by Amazon empowers customers to choose the exact capabilities that best serve their unique needs. In terms of performance, R1 is already beating a range of other models including Google’s Gemini 2.0 Flash, Anthropic’s Claude 3.5 Sonnet, Meta’s Llama 3.3-70B and OpenAI’s GPT-4o, according to the Artificial Analysis Quality Index, a widely followed independent AI analysis ranking. When evaluating model performance, it is recommended to conduct multiple tests and average the results. With AWS, you can use DeepSeek-R1 models to build, experiment, and responsibly scale your generative AI ideas by using this powerful, cost-efficient model with minimal infrastructure investment. With Amazon Bedrock Guardrails, you can independently evaluate user inputs and model outputs. Updated on 1st February: You can use the Bedrock playground to understand how the model responds to various inputs and to fine-tune your prompts for optimal results.
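The advice to run multiple tests and average the results can be sketched as follows. This is a minimal illustration, not DeepSeek's or AWS's evaluation harness: `average_score` and `fake_eval` are hypothetical names, and `fake_eval` stands in for whatever benchmark call you actually run.

```python
import statistics
from typing import Callable, Sequence, Tuple


def average_score(run_eval: Callable[[int], float],
                  seeds: Sequence[int]) -> Tuple[float, float]:
    """Run the evaluation once per seed; return (mean, sample std dev)."""
    scores = [run_eval(seed) for seed in seeds]
    mean = statistics.mean(scores)
    # stdev needs at least two samples; report 0.0 for a single run.
    spread = statistics.stdev(scores) if len(scores) > 1 else 0.0
    return mean, spread


def fake_eval(seed: int) -> float:
    # Hypothetical stand-in for a real benchmark run (assumption):
    # returns a score that varies slightly with the seed.
    return 0.70 + 0.01 * (seed % 3)


if __name__ == "__main__":
    mean, spread = average_score(fake_eval, seeds=[0, 1, 2, 3, 4, 5])
    print(f"mean={mean:.3f} stdev={spread:.3f}")
```

Reporting the spread alongside the mean helps show whether a difference between two models is larger than the run-to-run noise.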

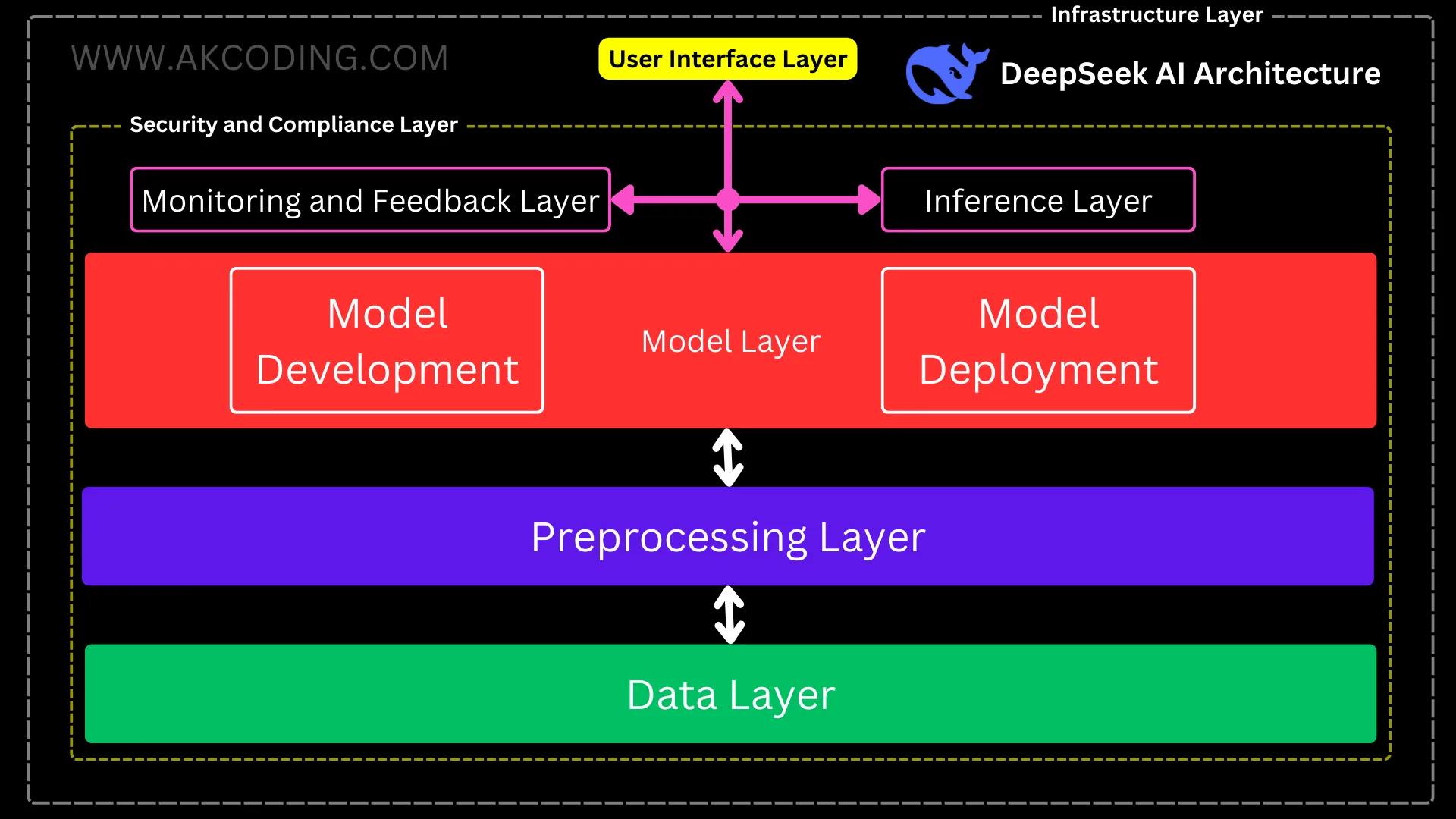

Let’s dive in and see how you can easily set up endpoints for models, explore and evaluate LLMs, and securely deploy them, all while enabling robust model monitoring and maintenance capabilities in production. No mention is made of OpenAI, which closes off its models, except to show how DeepSeek compares on performance. DeepSeek AI’s decision to open-source both the 7 billion and 67 billion parameter versions of its models, including base and specialized chat variants, aims to foster widespread AI research and commercial applications. "If you ask it what model are you, it would say, ‘I’m ChatGPT,’ and the most likely reason for that is that the training data for DeepSeek was harvested from hundreds of thousands of chat interactions with ChatGPT that were just fed directly into DeepSeek’s training data," said Gregory Allen, a former U.S. Comprising the DeepSeek LLM 7B/67B Base and DeepSeek LLM 7B/67B Chat, these open-source models mark a notable stride forward in language comprehension and versatile application.

DeepSeek-R1 achieves state-of-the-art results in various benchmarks and offers both its base models and distilled versions for community use. Data security: You can use enterprise-grade security features in Amazon Bedrock and Amazon SageMaker to help you keep your data and applications secure and private. This can feel discouraging for researchers or engineers working with limited budgets. In their research paper, DeepSeek’s engineers said they had used about 2,000 Nvidia H800 chips, which are less advanced than the most cutting-edge chips, to train its model. Most Chinese engineers are eager for their open-source projects to be used by foreign companies, especially those in Silicon Valley, in part because "no one in the West respects what they do because everything in China is stolen or created by cheating," said Kevin Xu, the U.S.-based founder of Interconnected Capital, a hedge fund that invests in AI. DeepSeek and the hedge fund it grew out of, High-Flyer, didn’t immediately respond to emailed questions Wednesday, the start of China’s extended Lunar New Year holiday.

DeepSeek’s chatbot’s answer echoed China’s official statements, saying the relationship between the world’s two largest economies is one of the most important bilateral relationships globally. China remains tense but crucial," part of its answer said. The startup Zero One Everything (01-AI) was launched by Kai-Fu Lee, a Taiwanese businessman and former president of Google China. There is good reason for the President to be prudent in his response. For many Chinese, the Winnie the Pooh character is a playful taunt of President Xi Jinping. DeepSeek’s chatbot said the bear is a beloved cartoon character that is adored by countless children and families in China, symbolizing joy and friendship. Does Liang’s recent meeting with Premier Li Qiang bode well for DeepSeek’s future regulatory environment, or does Liang need to think about getting his own team of Beijing lobbyists? The core of DeepSeek’s success lies in its advanced AI models. The success here is that they’re relevant among American technology companies spending what is approaching or surpassing $10B per year on AI models. Observers are eager to see whether the Chinese company has matched America’s leading AI companies at a fraction of the cost. See the official DeepSeek-R1 Model Card on Hugging Face for further details.
